Part I : Shrinking Neural Networks for Embedded Systems Using Low Rank Approximations (LoRA)

Motivation

As someone who implements deep learning models on embedded systems, an important consideration is often the size of the model.

There are several notions of size, but the two major ones are :

- Number of elements to save, usually in the form of a matrix or tensor.

- Number of operations to perform, usually in the form of a matrix multiplication.

The first affects the amount of memory required to store the model, while the second affects the amount of computation required to run the model.

I have worked extensively in matrix factorization before, mostly factorizing sparse matrices for recommendation systems.

Unfortunately, while there are many LoRA walk throughs using code, I was not able to find a simple succint explanation of the problem and the solution. And why it works. This article aims to address that gap by providing an elementary explanation of the problem, how to set up the optimization problem and how to solve it, in possibly linear time.

This is part I, that deals with factorizing a fully connected layer. Part II will deal with factorizing a convolutional layer.

Code Follow Along

The Jupyter Notebook for this is at https://github.com/FranciscoRMendes/tensor-rank

Introduction to Matrices

First we start with basic definitions of matrices and tensors.

If you are reading this article you probably know what a matrix is, but here is one anyway.

Again, consider a matrix multiplication with a vector,

see addendum for a tensor multiplication.

Size

Given the matrix above the main focus of this article will be to reduce the matrix size so that we never actually store all 12 elements of the matrix. With that said here is a more precise definition of how much space the matrix above takes on hardware memory.

In IEEE 754 single-precision format:

- 1 bit is used for the sign (positive or negative).

- 8 bits are used for the exponent.

- 23 bits are used for the significand (also called mantissa or fraction).

The total of 32 bits (or 4 bytes) is used to represent a single floating-point number.

So for this above matrix we need 12 memory locations or

While it is useful to think of tensors as a list of matrices, it is important to note that they have some important differences as mathematical objects. It is perhaps more useful to think of matrices as a “special case” of a tensor.

For this introduction, we will stick to matrices. In a following article, I will build the equivalent intuition but for tensors. However, I will provide points of equivalence between the two, wherever possible.

Number of Operations

For the given operation, we’re performing a matrix multiplication of a 3×43×4 matrix with a 4×14×1 column vector. The resulting matrix will be a 3×13×1 column vector.

To compute each element of the resulting vector, we perform the dot product of each row of the matrix with the column vector. Since each row has 4 elements, this involves 4 multiplications and 3 additions.

Therefore, for the entire operation:

- There are 3 rows in the matrix.

- For each row, there are 4 multiplications and 3 additions.

Hence, the total number of multiplications is 3×4=123×4=12, and the total number of additions is 3×3=93×3=9.

So, in total, there are 12 multiplications and 9 additions involved in the matrix-vector multiplication operation. For two matrices being multiplied of dimensions

Neural Networks as sequences of matrix/ tensor operations

A Neural Network is simply a sequence of tensor operations. In this section we will outline a simple neural network and illustrate this concept.

Input (3 nodes) –> Hidden Layer (2 nodes, ReLU) –> Output Layer (2 nodes, Sigmoid)

Hidden layer:

Output layer:

We can combine these equations into one :

In embedded systems its common to just feed an extra input of 1’s to the input and drop the biases. If you are familiar with matrix formulations of linear regression, you are probably familiar with this, but if not, you can see this clearly by the following,

Thus, after adding in the 1s to X and the column of biases to

This X and W are modified versions of the X, W mentioned before but we will stick to this notation for the rest of this article. You can generalize this to as many layers as you want.

Computational Complexity and Size

Recall that we already defined how much memory is occupied by a matrix. So our matrix

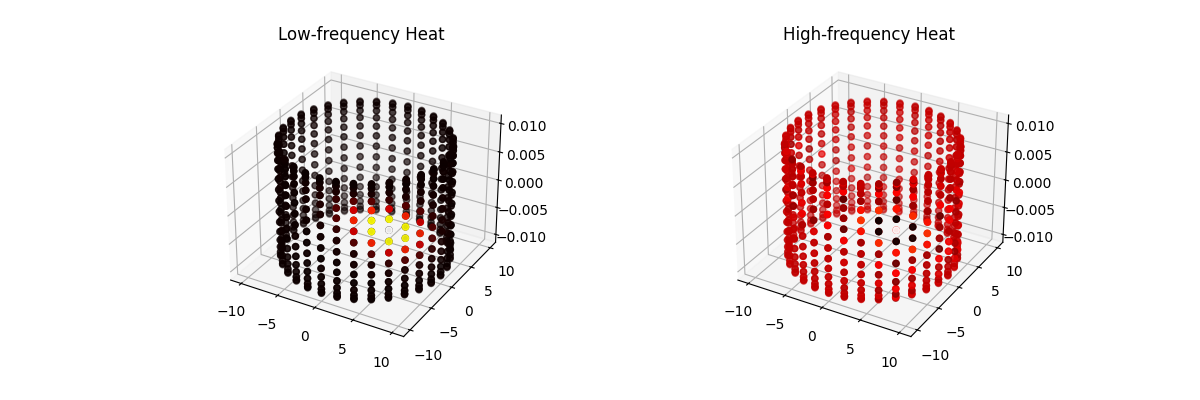

To give you a sense of how big this can be consider an input of 8192 FFT coefficients, and a second layer of size

For a signal/ image processing problem, usually

Since our input data is usually either images or signals of the order of

Simpler Problem Statement of Size Reduction

Let us start by trying to find a smaller matrix

Fortunately this is a very well defined problem in mathematics and can be solved by taking an SVD of

The more perceptive of you will have noticed that we have two options to replace the matrix

Size

First, let’s analyze the two in terms of size. The size of

Where

How much space did we save?

Recall,

We can in fact, place an upper bound on

Where usually this upper bound is never tight. In our example, this value is ,

Usually, we aim to reduce this number by 50% or more i.e. a rank of around 64. But in many of

our use cases we see ranks of the order

Multiplication

If the concept of size is clear, then it immediately becomes clear why the number of

multiplications also reduces.

When multiplying

Formulating The Indirect Optimization Problem

Okay so we know what to do, we need to find an

I will explain later what the “indirect” means.

The new activations are

For a given metric,

Minimize

Now we can use any classification metric,

Thus, we need to find the smallest

- The problem is easily interpret-able now, find the smallest

- Second, we

Optimization Algorithm

One obvious way to do this would be a brute force approach, that is, to simply start at

There is an easier way to achieve this, but the proof is left as an exercise for the reader. Hint : study the properties of the optimization

algorithm at

Formulating the Direct Optimization Problem

The indirect optimization problem is a purely mathematical problem that does not “know” that the matrix comes from a neural network.

Now we know that the matrix comes from a neural network, we can use this information to our advantage.

Recall, the general formulation of a neural network,

We can use the fact that the matrix comes from a neural network to our advantage. We can use the loss function of the neural network to directly optimize the matrices

Here, we must be careful to change the notation slightly. We will denote the matrices

But these are not necessarily the matrices that come from the SVD of

The reason for this is that the neural network learns the best

This is a subtle but important distinction. This is the approach that LoRA uses.

LoRA optimization, where U,S and V are defined for a suitable choice of

However, there are some important disadvantages to this approach. Let us start with the advantages,

- Easy to understand, we want low rank matrices instead of our first layer, so why not start with them and optimize them directly.

- The optimization problem is now a neural network optimization problem, which is a well studied field.

- Training is much faster than if you trained on a full rank matrix in the first layer. This is important if you want to run many trials with other hyperparameters.

- Binary search as known issues.

Disadvantages, - You need to know the rank of the matrix you are looking for. This is not always easy to know.

- If you want to find the rank you need to run the optimization problem for every rank you want to try.And this optimization problem is more expensive than the one above.

- The optimization problem is non-convex, so you may not find the global minimum for the delta you want. Randomness between runs could result in a different weight matrix every time.

Conclusion

For us a neat work around was to get a sense of a usable rank by running the indirect optimization problem and then using that rank to run the direct optimization problem. This way we could get a good rank in a reasonable amount of time.

Let us recap some of the important concepts we have learned in this article.

- The size of a matrix is the number of elements it has.

- The number of operations in a matrix multiplication is the number of multiplications and additions involved.

- The rank of a matrix is the number of linearly independent rows or columns it has.

- The SVD of a matrix is a way to factorize it into three matrices.

- The low rank approximation of a matrix is a way to approximate it with a smaller matrix.

- The low rank approximation of a matrix can be found by taking the SVD of the matrix and choosing the largest singular values.

- There are two ways to find the low rank approximation of a matrix: the indirect optimization problem and the direct optimization problem.

References

- LoRA: Low-Rank Adaptation of Large Language Models

- Low Rank Approximation of a Matrix

- Singular Value Decomposition

- Matrix Multiplication

Addendum

Tensor multiplication is a generalization of matrix multiplication. In tensor multiplication, the order of the tensors matters. For example, the product of a 3×4×2 tensor with a 2×4×1 tensor is a 3×4×1 tensor. The number of multiplications and additions involved in tensor multiplication is the same as in matrix multiplication, but the dimensions of the tensors are different. For example, the product of a 3×4×2 tensor with a 2×4×1 tensor involves 3×4×2=243×4×2=24 multiplications and 3×3=93×3=9 additions.

Example of tensor multiplication of two tensors

Tensor

Tensor

Resulting Tensor

The calculations are as follows:

$$

\begin{align*}

C[0,0,0] &= 11 + 20 = 1 + 0 = 1 \

C[0,0,1] &= 10 + 21 = 0 + 2 = 2 \

C[0,1,0] &= 31 + 40 = 3 + 0 = 3 \

C[0,1,1] &= 30 + 41 = 0 + 4 = 4 \

C[1,0,0] &= 51 + 60 = 5 + 0 = 5 \

C[1,0,1] &= 50 + 61 = 0 + 6 = 6 \

C[1,1,0] &= 71 + 80 = 7 + 0 = 7 \

C[1,1,1] &= 70 + 81 = 0 + 8 = 8 \

\end{align*}

$$