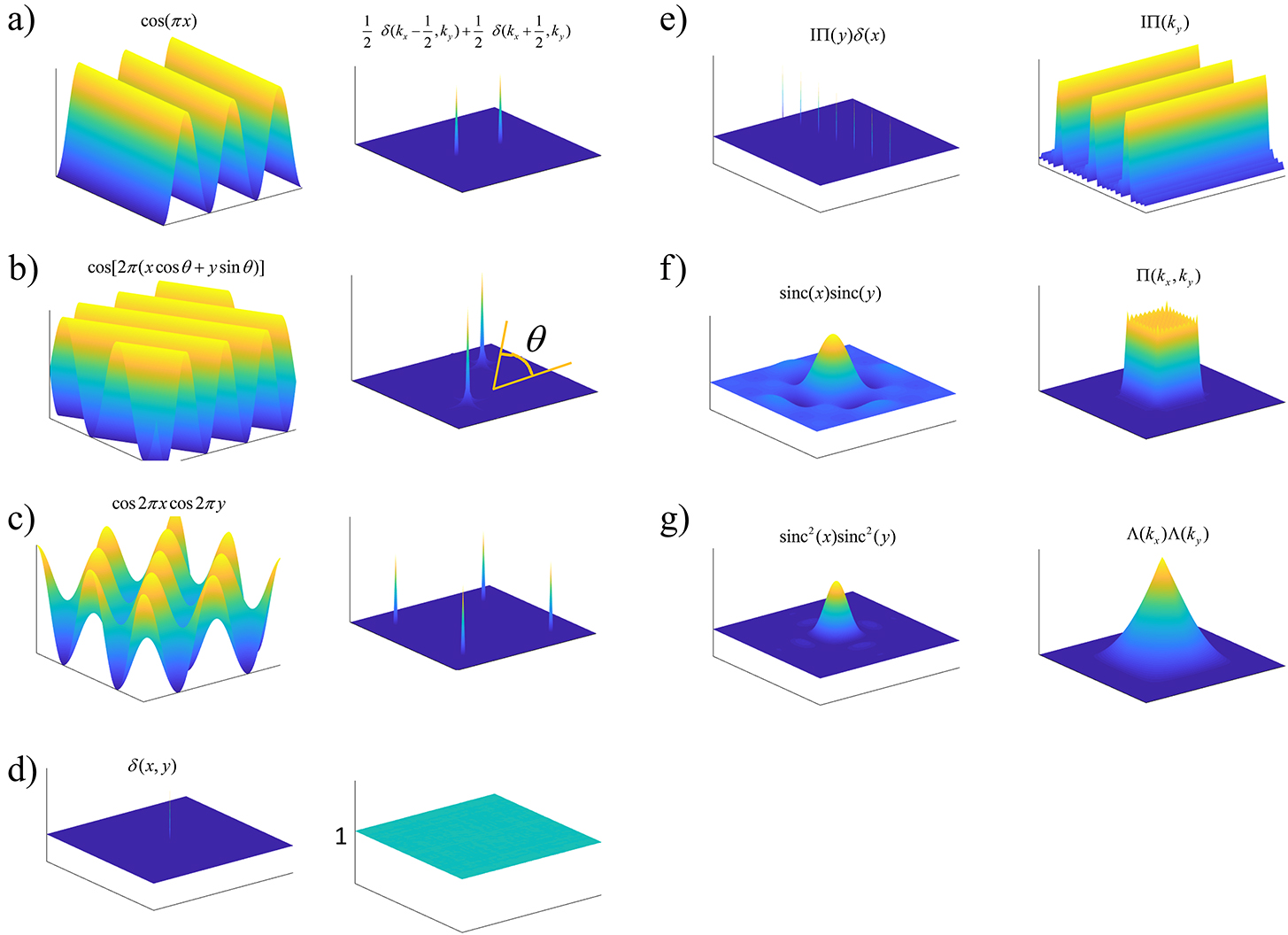

Locality, Learning, and the FFT: Why CNNs Avoid the Fourier Domain

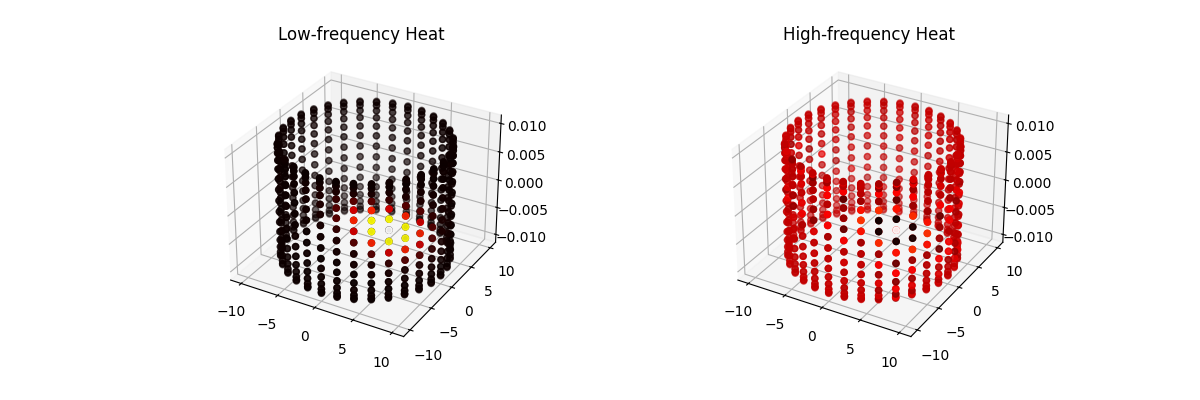

Explore how Fourier transforms on graphs power spectral GCNs, from modeling heat flow to solving cold-start recommendations.

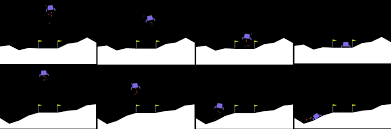

Soft Actor Critic (Visualized) Part 2: Lunar Lander Example from Scratch in Torch

From-scratch implementation of the SAC algorithm in PyTorch for Lunar Lander.

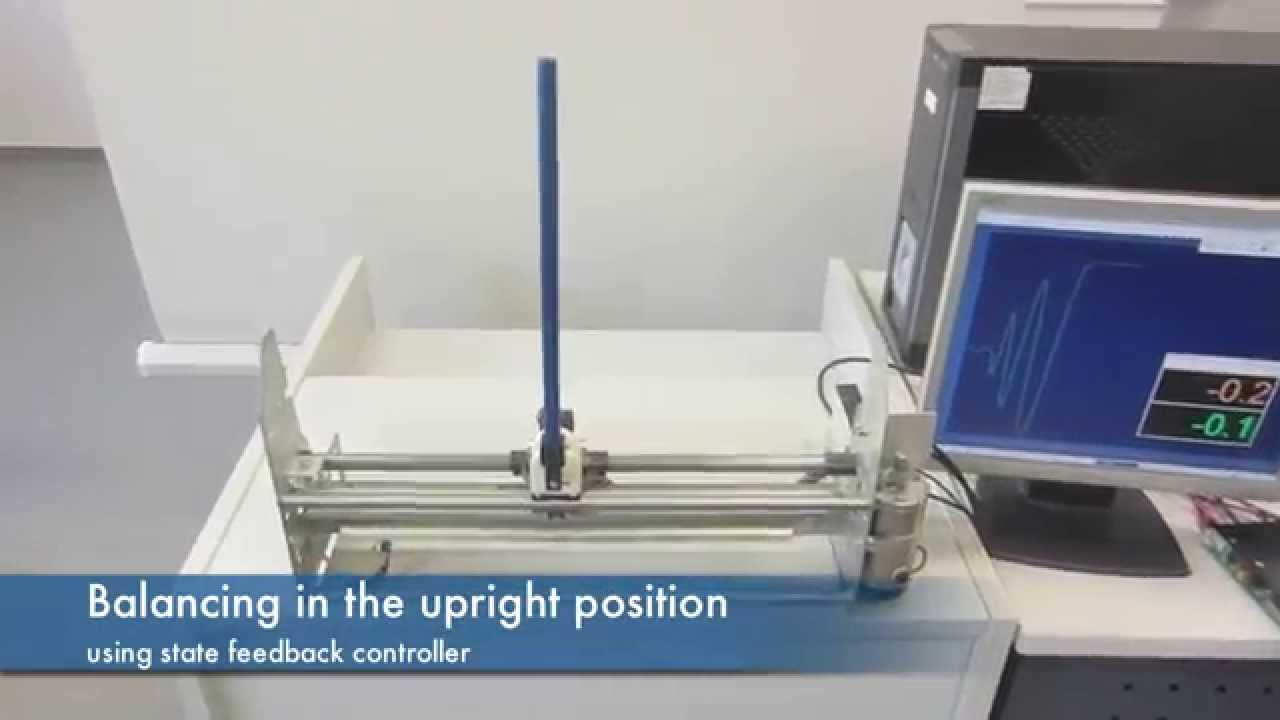

Soft Actor Critic (Visualized): From Scratch in Torch for Inverted Pendulum

From-scratch implementation of the SAC algorithm in PyTorch for Inverted Pendulum.

A review of the mathematical essentials for recommender systems, comparing matrix factorization and graph neural networks.

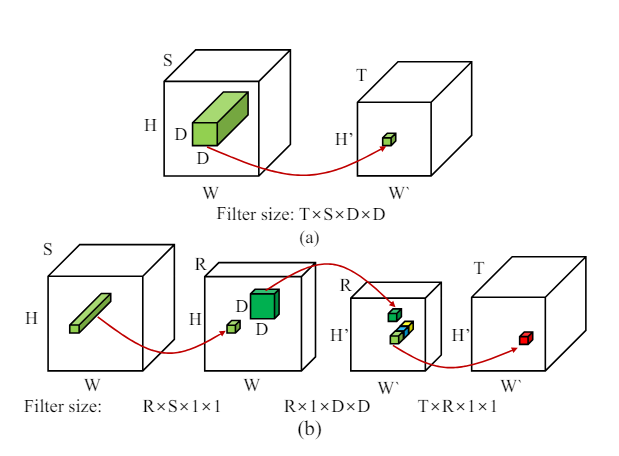

Part III: What does Low Rank Factorization of a Convolutional Layer really do?

In this post, we will explore the Low Rank Approximation (LoRA) technique for shrinking neural networks for embedded systems. We will focus on the Convolutional Neural Network (CNN) case and discuss the rank selection process.

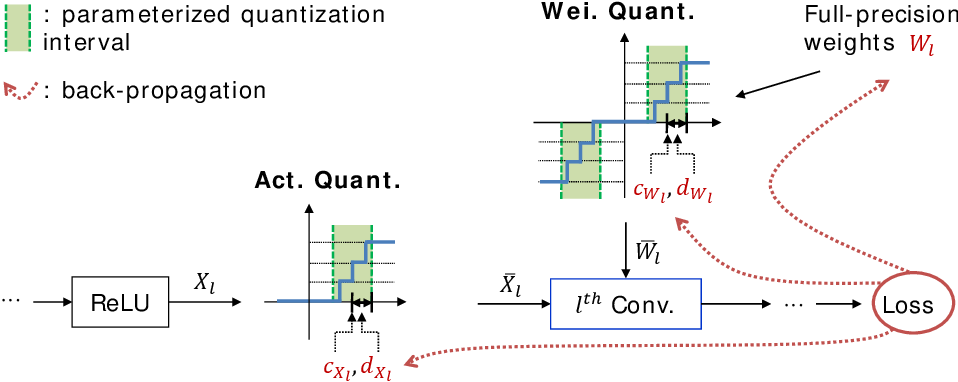

Are Values Passed Between Layers Float or Int in PyTorch Post Quantization?

In this article, we will discuss how values are passed between layers after quantization in PyTorch. We will also discuss why floating-point operations are typically slower than integer operations.

A Manual Implementation of Quantization in PyTorch - Single Layer

A manual implementation of quantization in PyTorch.

Part II: Shrinking Neural Networks for Embedded Systems Using Low Rank Approximations (LoRA)

In this post, we will explore the Low Rank Approximation (LoRA) technique for shrinking neural networks for embedded systems. We will focus on the Convolutional Neural Network (CNN) case and discuss the rank selection process.

Part I: Shrinking Neural Networks for Embedded Systems Using Low Rank Approximations (LoRA)

An elementary explanation of the problem with full-rank matrices in neural networks and its solution via low-rank approximations, with a detailed explanation of how to set up the optimization problem and how to solve it, possibly in linear time.
